ZStack Cloud Platform

Single Server, Free Trial for One Year

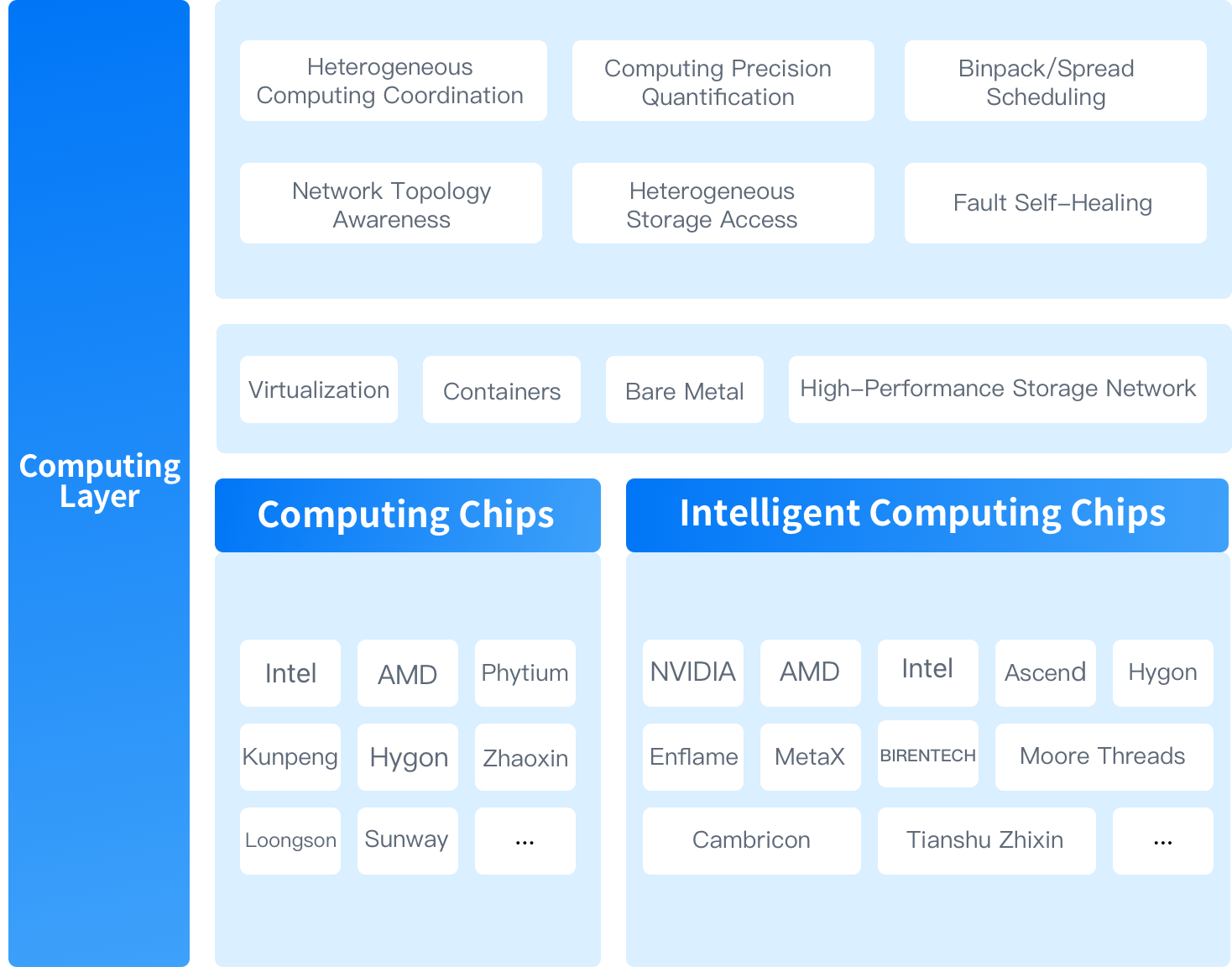

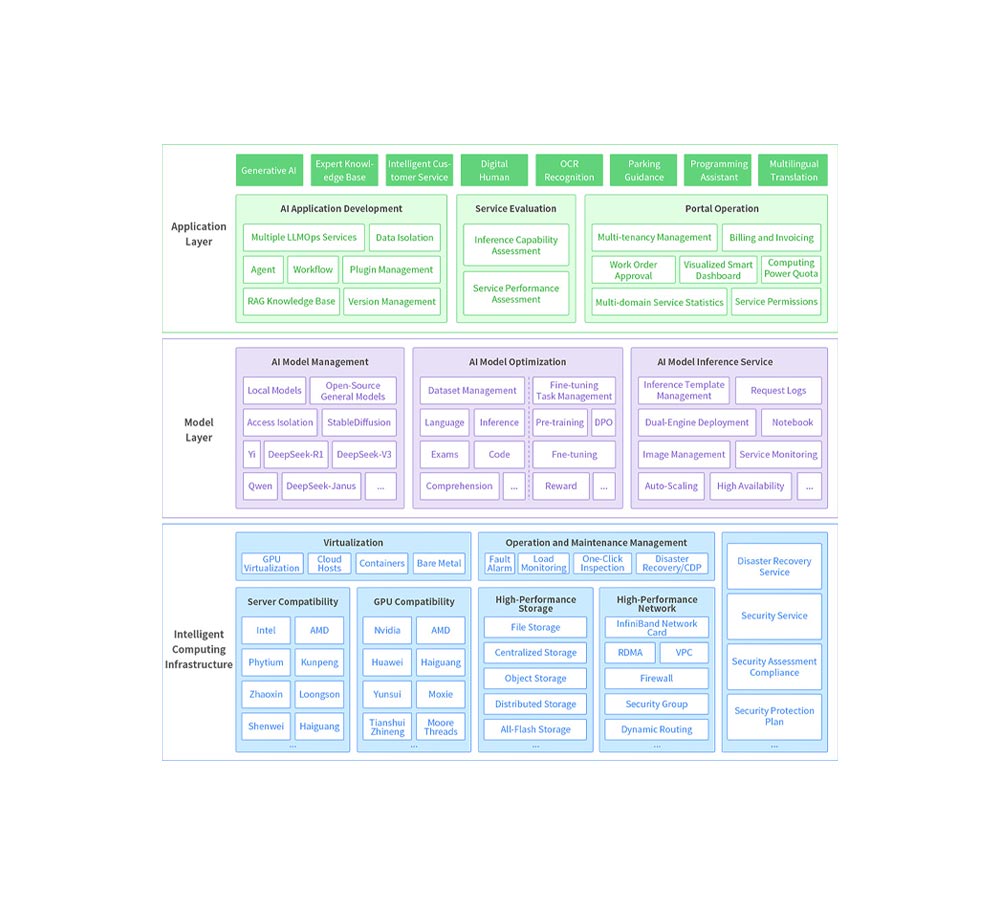

ZStack AIOS is a self-developed, productized, and standardized next-generation AI infrastructure operating system. Built around AI, it supports AI innovation across three layers: the computing power layer, the model layer, and the operational layer. It can be upgraded seamlessly from existing cloud platforms and remains compatible with all cloud infrastructure module services, product documentation, and after-sales services.